Intimations of Imitations: Visions of Cellular Prosthesis and Functionally Restorative Medicine – Article by Franco Cortese

In this essay I argue that the technologies and techniques developed in the fields of Synthetic Ion Channels and Ion-Channel Reconstitution, which have emerged from supramolecular chemistry and bio-organic chemistry over the past four decades, can be applied to gradual cellular (and particularly neuronal) replacement. The result would be a new interdisciplinary field that applies these techniques and technologies toward the indefinite functional restoration of cellular mechanisms and systems, as opposed to their currently proposed uses: aiding in the elucidation of cellular mechanisms and their underlying principles, and serving as biosensors.

In earlier essays (see here and here) I identified approaches to the synthesis of non-biological functional equivalents of neuronal components (i.e., ion-channels, ion-pumps, and membrane sections) and their sectional integration with the existing biological neuron: a sort of “physical” emulation, if you will. It has only recently come to my attention that there is an existing field emerging from supramolecular and bio-organic chemistry centered around the design, synthesis, and incorporation/integration of both synthetic/artificial ion channels and artificial bilipid membranes (i.e., lipid bilayers). The potential uses for such channels commonly listed in the literature have nothing to do with life-extension, however, and the field has, to my knowledge, yet to envision their use in replacing our existing neuronal components as they degrade (or before they are able to), seeing them instead as aids in the elucidation of cellular operations and mechanisms and as biosensors. I argue here that the very technologies and techniques that constitute the field (Synthetic Ion Channels & Ion-Channel/Membrane Reconstitution) can be used towards the purposes of indefinite longevity and life-extension through the iterative replacement of cellular constituents (particularly the components comprising our neurons – ion-channels, ion-pumps, sections of bi-lipid membrane, etc.) so as to negate the molecular degradation they would have otherwise eventually undergone.

While I envisioned an electro-mechanical-systems approach in my earlier essays, the field of Synthetic Ion-Channels from the start in the early 1970s applied a molecular approach to the problem of designing molecular systems that produce certain functions according to their chemical composition or structure. Note that this approach corresponds to (or can be categorized under) the passive-physicalist sub-approach of the physicalist-functionalist approach (the broad approach overlying all varieties of physically embodied, “prosthetic” neuronal functional replication) identified in an earlier essay.

The field of synthetic ion channels is also referred to as ion-channel reconstitution, which designates “the solubilization of the membrane, the isolation of the channel protein from the other membrane constituents and the reintroduction of that protein into some form of artificial membrane system that facilitates the measurement of channel function,” and more broadly denotes “the [general] study of ion channel function and can be used to describe the incorporation of intact membrane vesicles, including the protein of interest, into artificial membrane systems that allow the properties of the channel to be investigated” [1]. The field has been active since the 1970s, with experimental successes in the incorporation of functioning synthetic ion channels into biological bilipid membranes and artificial membranes dissimilar in molecular composition and structure to biological analogues underlying supramolecular interactions, ion selectivity, and permeability throughout the 1980s, 1990s, and 2000s. The relevant literature suggests that their proposed use has thus far been limited to the elucidation of ion-channel function and operation, the investigation of their functional and biophysical properties, and to a lesser degree for the purpose of “in-vitro sensing devices to detect the presence of physiologically active substances including antiseptics, antibiotics, neurotransmitters, and others” through the “… transduction of bioelectrical and biochemical events into measurable electrical signals” [2].

Thus my proposal of gradually integrating artificial ion-channels and/or artificial membrane sections for the purpose of indefinite longevity appears to be novel, while the notion of artificial ion-channels and neuronal membrane systems in general had already been conceived (and successfully created and experimentally verified, though presumably not integrated in vivo). By this proposal I mean their use in replacing existing biological neurons towards the aim of gradual substrate replacement, or alternatively in constructing artificial neurons that, rather than replacing existing biological neurons, become integrated with existing biological neural networks towards the aim of intelligence amplification and augmentation while assuming functional and experiential continuity with our existing biological nervous system.

The field of Functionally Restorative Medicine (and the orphan sub-field of whole-brain gradual-substrate replacement, or “physically embodied” brain-emulation, if you like) can take advantage of the decades of experimental progress in this field, incorporating both the technological and methodological infrastructures used in and underlying the field of Ion-Channel Reconstitution and Synthetic/Artificial Ion Channels & Membrane-Systems (and the technologies and methodologies underlying their corresponding experimental-verification and incorporation techniques) for the purpose of indefinite functional restoration via the gradual and iterative replacement of neuronal components (including sections of bilipid membrane, ion channels, and ion pumps) by MEMS (micro-electro-mechanical systems) or more likely NEMS (nano-electro-mechanical systems).

The technological and methodological infrastructure underlying this field can be utilized for both the creation of artificial neurons and for the artificial synthesis of normative biological neurons. Much work in the field required artificially synthesizing cellular components (e.g., bilipid membranes) with structural and functional properties as similar to normative biological cells as possible, so that the alternative designs (i.e., dissimilar to the normal structural and functional modalities of biological cells or cellular components) and how they affect and elucidate cellular properties, could be effectively tested. The iterative replacement of either single neurons, or the sectional replacement of neurons with synthesized cellular components (including sections of the bi-lipid membrane, voltage-dependent ion-channels, ligand-dependent ion channels, ion pumps, etc.) is made possible by the large body of work already done in the field. Consequently the technological, methodological, and experimental infrastructures developed for the fields of Synthetic Ion Channels and Ion-Channel/Artificial-Membrane Reconstitution can be utilized for the purpose of (a) iterative replacement and cellular upkeep via biological analogues (or not differing significantly in structure or functional and operational modality to their normal biological counterparts) and/or (b) iterative replacement with non-biological analogues of alternate structural and/or functional modalities.

Rather than sensing when a given component degrades and then replacing it with an artificially-synthesized biological or non-biological analogue, it appears to be much more efficient to determine the projected time it takes for a given component to degrade or otherwise lose functionality, and simply automate the iterative replacement in this fashion, without providing in vivo systems for detecting molecular or structural degradation. This would allow us to achieve both experimental and pragmatic success in such cellular prosthesis sooner, because it doesn’t rely on the complex technological and methodological infrastructure underlying in vivo sensing, especially on the scale of single neuron components like ion-channels, and without causing operational or functional distortion to the components being sensed.
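The scheduled approach described above can be illustrated with a minimal sketch. It assumes that each component type has a single projected lifetime and that replacement is triggered at a fixed fraction of that lifetime; the component names, lifetimes, and the `safety_margin` parameter are hypothetical placeholders chosen for illustration, not measured values or an established protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """A replaceable neuronal component (hypothetical illustration)."""
    name: str
    projected_lifetime_days: float  # assumed projected time to loss of function


def replacement_times(component, horizon_days, safety_margin=0.8):
    """Return the times (in days) at which to replace one component.

    Replacement is scheduled at a fixed fraction (safety_margin) of the
    projected lifetime, so no in vivo degradation sensing is required.
    """
    if not 0 < safety_margin <= 1:
        raise ValueError("safety_margin must be in the interval (0, 1]")
    interval = component.projected_lifetime_days * safety_margin
    times = []
    k = 1
    while k * interval <= horizon_days:
        times.append(k * interval)
        k += 1
    return times


def build_schedule(components, horizon_days, safety_margin=0.8):
    """Merge the per-component schedules into one time-ordered event list."""
    events = [
        (t, c.name)
        for c in components
        for t in replacement_times(c, horizon_days, safety_margin)
    ]
    return sorted(events)


if __name__ == "__main__":
    # Hypothetical lifetimes, for illustration only.
    parts = [Component("ion_channel", 10.0), Component("ion_pump", 4.0)]
    for day, name in build_schedule(parts, horizon_days=10.0, safety_margin=0.5):
        print(f"day {day:5.1f}: replace {name}")
```

The design choice mirrors the argument: the schedule depends only on projected lifetimes fixed in advance, so nothing in the loop has to observe the state of the component being replaced.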

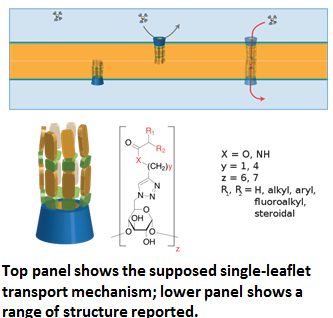

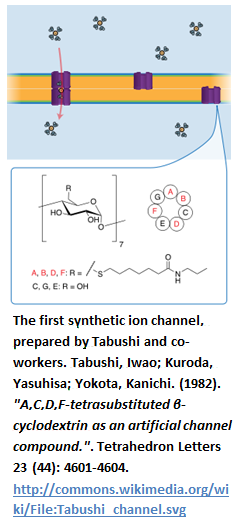

A survey of progress in the field [3] lists several broad design motifs. I will first list the design motifs falling within the scope of the survey, and the examples it provides. Selections from both papers are meant to show the depth and breadth of the field, rather than to elucidate the specific chemical or kinetic operations under the purview of each design-variety.

For a much more comprehensive, interactive bibliography of papers falling within the field of Synthetic Ion Channels or constituting the historical foundations of the field, see Jon Chui’s online bibliography here, which charts the developments in this field up until 2011.

First Survey

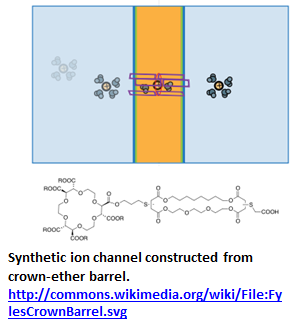

Unimolecular ion channels:

Examples include (a) synthetic ion channels with oligocrown ionophores, [5] (b) using α-helical peptide scaffolds and rigid push–pull p-octiphenyl scaffolds for the recognition of polarized membranes, [6] and (c) modified varieties of the β-helical scaffold of gramicidin A [7].

Barrel-stave supramolecules:

Examples of this general class include voltage-gated synthetic ion channels formed by macrocyclic bolaamphiphiles and rigid-rod p-octiphenyl polyols [8].

Macrocyclic, branched and linear non-peptide bolaamphiphiles as staves:

Examples of this sub-class include synthetic ion channels formed by (a) macrocyclic, branched and linear bolaamphiphiles, and dimeric steroids, [9] and by (b) non-peptide macrocycles, acyclic analogs, and peptide macrocycles (respectively) containing abiotic amino acids [10].

Dimeric steroid staves:

Examples of this sub-class include channels using polyhydroxylated norcholentriol dimers [11].

p-Oligophenyls as staves in rigid-rod β-barrels:

Examples of this sub-class include “cylindrical self-assembly of rigid-rod β-barrel pores preorganized by the nonplanarity of p-octiphenyl staves in octapeptide-p-octiphenyl monomers” [12].

Synthetic polymers:

Examples of this sub-class include synthetic ion channels and pores comprised of (a) polyalanine, (b) polyisocyanates, (c) polyacrylates, [13] formed by (i) ionophoric, (ii) ‘smart’, and (iii) cationic polymers [14]; (d) surface-attached poly(vinyl-n-alkylpyridinium) [15]; (e) cationic oligo-polymers [16], and (f) poly(m-phenylene ethylenes) [17].

Helical β-peptides (used as staves in barrel-stave method):

Examples of this class include cationic β-peptides with antibiotic activity, presumably acting as amphiphilic helices that form micellar pores in anionic bilayer membranes [18].

Monomeric steroids:

Examples of this sub-class include synthetic carriers, channels and pores formed by monomeric steroids [19], synthetic cationic steroid antibiotics that may act by forming micellar pores in anionic membranes [20], neutral steroids as anion carriers [21], and supramolecular ion channels [22].

Complex minimalist systems:

Examples of this sub-class falling within the scope of this survey include ‘minimalist’ amphiphiles as synthetic ion channels and pores [23], membrane-active ‘smart’ double-chain amphiphiles, expected to form ‘micellar pores’ or self-assemble into ion channels in response to acid or light [24], and double-chain amphiphiles that may form ‘micellar pores’ at the boundary between photopolymerized and host bilayer domains and representative peptide conjugates that may self-assemble into supramolecular pores or exhibit antibiotic activity [25].

Non-peptide macrocycles as hoops:

Examples of this sub-class falling within the scope of this survey include synthetic ion channels formed by non-peptide macrocycles and their acyclic analogs [26] and peptide macrocycles containing abiotic amino acids [27].

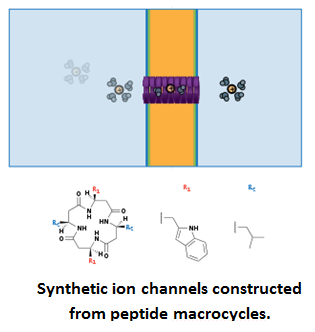

Peptide macrocycles as hoops and staves:

Examples of this sub-class include (a) synthetic ion channels formed by self-assembly of macrocyclic peptides into genuine barrel-hoop motifs that mimic the β-helix of gramicidin A with cyclic β-sheets. The macrocycles are designed to bind on top of channels and cationic antibiotics (and several analogs) are proposed to form micellar pores in anionic membranes [28]; (b) synthetic carriers, antibiotics (and analogs), and pores (and analogs) formed by macrocyclic peptides with non-natural subunits. Certain macrocycles may act as β-sheets, possibly as staves of β-barrel-like pores [29]; (c) bioengineered pores as sensors. Covalent capturing and fragmentations have been observed on the single-molecule level within an engineered α-hemolysin pore containing an internal reactive thiol [30].

Summary

Thus even without knowledge of supramolecular or organic chemistry, one can see that a variety of alternate approaches to the creation of synthetic ion channels, and several sub-approaches within each larger ‘design motif’ or broad-approach, not only exist but have been experimentally verified, varietized, and refined.

Second Survey

The following selections [31] illustrate the chemical, structural, and functional varieties of synthetic ion channels, categorized according to whether they are cation-conducting or anion-conducting, respectively. These examples are used to further emphasize the extent of the field, and the number of alternative approaches to synthetic ion-channel design, implementation, integration, and experimental verification already existent. Permission to use all the following selections and figures was obtained from the author of the source.

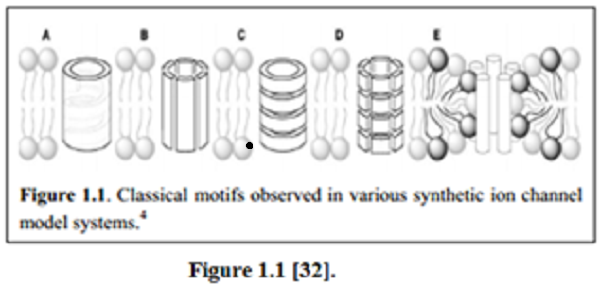

There are five classical design-motifs for synthetic ion-channels, categorized by structure, that are identified within the paper:

A: Unimolecular macromolecules,

B: Complex barrel-stave,

C: Barrel-rosette,

D: Barrel hoop, and

E: Micellar supramolecules.

Cation Conducting Channels:

UNIMOLECULAR

“The first non-peptidic artificial ion channel was reported by Kobuke et al. in 1992” [33].

The channel contained “an amphiphilic ion pair consisting of oligoether-carboxylates and mono- (or di-) octadecylammonium cations. The carboxylates formed the channel core and the cations formed the hydrophobic outer wall, which was embedded in the bilipid membrane with a channel length of about 24 to 30 Å. The resultant ion channel, formed from molecular self-assembly, is cation-selective and voltage-dependent” [34].

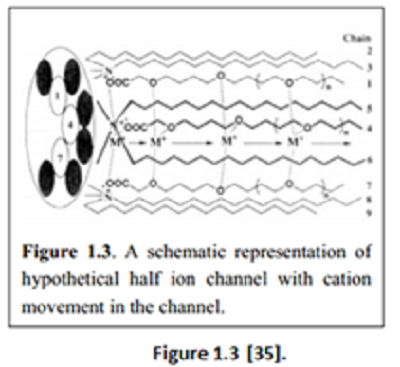

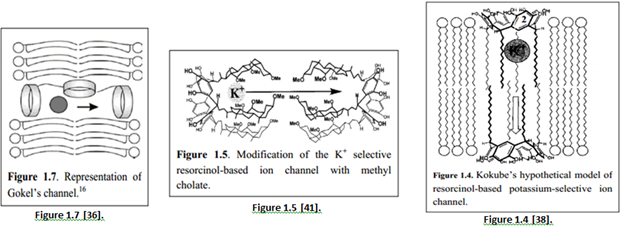

“Later, Kobuke et al. synthesized another channel comprising of resorcinol-based cyclic tetramer as the building block. The resorcin[4]arene monomer consisted of four long alkyl chains which aggregated to form a dimeric supramolecular structure resembling that of Gramicidin A” [35]. “Gokel et al. had studied [a set of] simple yet fully functional ion channels known as ‘hydraphiles’” [39].

“An example (channel 3) is shown in Figure 1.6, consisting of diaza-18-crown-6 crown ether groups and alkyl chains as side arms and spacers. Channel 3 is capable of transporting protons across the bilayer membrane” [40].

“A covalently bonded macrotetracycle (Figure 1.8) had shown to be about three times more active than Gokel’s ‘hydraphile’ channel, and its amide-containing analogue also showed enhanced activity” [44].

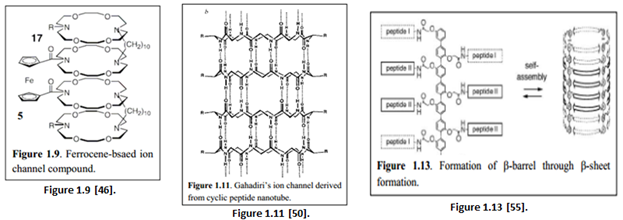

“Inorganic derivative using crown ethers have also been synthesized. Hall et al. synthesized an ion channel consisting of a ferrocene and 4 diaza-18-crown-6 linked by 2 dodecyl chains (Figure 1.9). The ion channel was redox-active as oxidation of the ferrocene caused the compound to switch to an inactive form” [45].

B-STAVES:

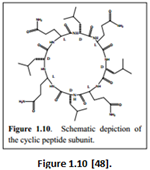

“These are more difficult to synthesize [in comparison to unimolecular varieties] because the channel formation usually involves self-assembly via non-covalent interactions” [47]. “A cyclic peptide composed of even number of alternating D- and L-amino acids (Figure 1.10) was suggested to form barrel-hoop structure through backbone-backbone hydrogen bonds by De Santis” [49].

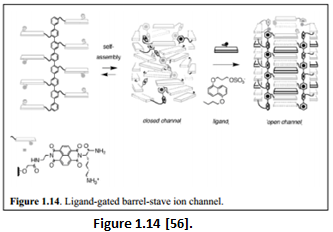

“A tubular nanotube synthesized by Ghadiri et al. consisting of cyclic D and L peptide subunits form a flat, ring-shaped conformation that stack through an extensive anti-parallel β-sheet-like hydrogen bonding interaction (Figure 1.11)” [51].

“Experimental results have shown that the channel can transport sodium and potassium ions. The channel can also be constructed by the use of direct covalent bonding between the sheets so as to increase the thermodynamic and kinetic stability” [52].

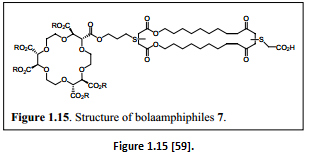

“By attaching peptides to the octiphenyl scaffold, a β-barrel can be formed via self-assembly through the formation of β-sheet structures between the peptide chains (Figure 1.13)” [53].

“The same scaffold was used by Matile et al. to mimic the structure of macrolide antibiotic amphotericin B. The channel synthesized was shown to transport cations across the membrane” [54].

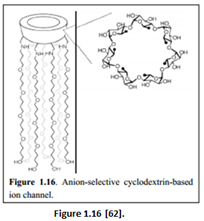

“Attaching the electron-poor naphthalene diimide (NDIs) to the same octiphenyl scaffold led to the hoop-stave mismatch during self-assembly that results in a twisted and closed channel conformation (Figure 1.14). Adding the complementary dialkoxynaphthalene (DAN) donor led to the cooperative interactions between NDI and DAN that favors the formation of barrel-stave ion channel.” [57].

MICELLAR

“These aggregate channels are formed by amphotericin involving both sterols and antibiotics arranged in two half-channel sections within the membrane” [58].

“An active form of the compound is the bolaamphiphiles (two-headed amphiphiles). Figure 1.15 shows an example that forms an active channel structure through dimerization or trimerization within the bilayer membrane. Electrochemical studies had shown that the monomer is inactive and the active form involves dimer or larger aggregates” [60].

ANION CONDUCTING CHANNELS:

“A highly active, anion selective, monomeric cyclodextrin-based ion channel was designed by Madhavan et al. (Figure 1.16). Oligoether chains were attached to the primary face of the β-cyclodextrin head group via amide bonds. The hydrophobic oligoether chains were chosen because they are long enough to span the entire lipid bilayer.” The channel was able to select “anions over cations” and “discriminate among halide anions in the order I- > Br- > Cl- (following Hofmeister series)” [61].

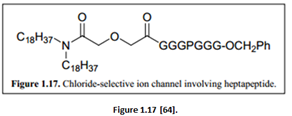

“The anion selectivity occurred via the ring of ammonium cations being positioned just beside the cyclodextrin head group, which helped to facilitate anion selectivity. Iodide ions were transported the fastest because the activation barrier to enter the hydrophobic channel core is lower for I- compared to either Br- or Cl-” [62]. “A more specific artificial anion selective ion channel was the chloride selective ion channel synthesized by Gokel. The building block involved a heptapeptide with Proline incorporated (Figure 1.17)” [63].

Cellular Prosthesis: Inklings of a New Interdisciplinary Approach

The paper cites “nanoreactors for catalysis and chemical or biological sensors” and “interdisciplinary uses as nano-filtration membrane, drug or gene delivery vehicles/transporters as well as channel-based antibiotics that may kill bacterial cells preferentially over mammalian cells” as some of the main applications of synthetic ion-channels [65], other than their normative use in elucidating cellular function and operation.

However, I argue that a whole interdisciplinary field and heretofore-unrecognized new approach or sub-field of Functionally Restorative Medicine is possible through taking the technologies and techniques involved in constructing, integrating, and experimentally verifying either (a) non-biological analogues of ion-channels and ion-pumps (thus trans-membrane proteins in general, also sometimes referred to as transport proteins or integral membrane proteins) and membranes (which include normative bilipid membranes, non-lipid membranes and chemically-augmented bilipid membranes), or (b) artificially synthesized biological analogues of ion-channels, ion-pumps and membranes, which are structurally and chemically equivalent to naturally-occurring biological components but which are synthesized artificially – and applying such technologies and techniques toward the purpose of the gradual replacement of the existing biological neurons constituting our nervous systems – or at least those neuron-populations that comprise the neocortex and prefrontal cortex, and through iterative procedures of gradual replacement thereby achieving indefinite longevity. There is still work to be done in determining the comparative advantages and disadvantages of various structural and functional (i.e., design) motifs, and in the logistics of implementing the iterative replacement or reconstitution of ion-channels, ion-pumps and sections of neuronal membrane in vivo.

The conceptual schemes outlined in Concepts for Functional Replication of Biological Neurons [66], Gradual Neuron Replacement for the Preservation of Subjective-Continuity [67] and Wireless Synapses, Artificial Plasticity, and Neuromodulation [68] would constitute variations on the basic approach underlying this proposed, embryonic interdisciplinary field. Certain approaches within the field of nanomedicine itself, particularly those approaches that constitute the functional emulation of existing cell-types, such as but not limited to Robert Freitas’s conceptual design for the functional emulation of the red blood cell (a.k.a. erythrocytes, haematids) [69], i.e., the Respirocyte, should themselves be seen as falling under the purview of this new approach, although not all approaches to Nanomedicine (diagnostics, drug-delivery and neuroelectronic interfacing) constitute the physical (i.e. electromechanical, kinetic, and/or molecular physically embodied) and functional emulation of biological cells.

The field of functionally-restorative medicine in general (and of nanomedicine in particular) and the fields of supramolecular and organic chemistry converge here, where these technological, methodological, and experimental infrastructures developed in the fields of Synthetic Ion-Channels and Ion Channel Reconstitution can be employed to develop a new interdisciplinary approach that applies the logic of prosthesis to the cellular and cellular-component (i.e., sub-cellular) scale; same tools, new use. These techniques could be used to iteratively replace the components of our neurons as they degrade, or to replace them with more robust systems that are less susceptible to molecular degradation. Instead of repairing the cellular DNA, RNA, and protein transcription and synthesis machinery, we bypass it completely by configuring and integrating the neuronal components (ion-channels, ion-pumps, and sections of bilipid membrane) directly.

Thus I suggest that theoreticians of nanomedicine look to the large quantity of literature already developed in the emerging fields of synthetic ion-channels and membrane-reconstitution, towards the objective of adapting and applying existing technologies and methodologies to the new purpose of iterative maintenance, upkeep and/or replacement of cellular (and particularly neuronal) constituents with either non-biological analogues or artificially synthesized but chemically/structurally equivalent biological analogues.

This new sub-field of Synthetic Biology needs a name to differentiate it from the other approaches to Functionally Restorative Medicine. I suggest the designation ‘cellular prosthesis’.

References:

[1] Williams (1994). An introduction to the methods available for ion channel reconstitution. In D. C. Ogden, Microelectrode techniques, The Plymouth workshop edition. Cambridge: Company of Biologists.

[2] Tomich, J., Montal, M. (1996). U.S Patent No. 5,16,890. Washington, DC: U.S. Patent and Trademark Office.

[3] Matile, S., Som, A., & Sorde, N. (2004). Recent synthetic ion channels and pores. Tetrahedron, 60(31), 6405–6435. ISSN 0040–4020, 10.1016/j.tet.2004.05.052. Access: http://www.sciencedirect.com/science/article/pii/S0040402004007690

[4] XIAO, F., (2009). Synthesis and structural investigations of pyridine-based aromatic foldamers.

[5] Ibid., p. 6411.

[6] Ibid., p. 6416.

[7] Ibid., p. 6413.

[8] Ibid., p. 6412.

[9] Ibid., p. 6414.

[10] Ibid., p. 6425.

[11] Ibid., p. 6427.

[12] Ibid., p. 6416.

[13] Ibid., p. 6419.

[14] Ibid.

[15] Ibid.

[16] Ibid., p. 6419.

[17] Ibid.

[18] Ibid., p. 6421.

[19] Ibid., p. 6422.

[20] Ibid.

[21] Ibid.

[22] Ibid.

[23] Ibid., p. 6423.

[24] Ibid.

[25] Ibid.

[26] Ibid., p. 6426.

[27] Ibid.

[28] Ibid., p. 6427.

[29] Ibid., p. 6427.

[30] Ibid., p. 6427.

[31] XIAO, F. (2009). Synthesis and structural investigations of pyridine-based aromatic foldamers.

[32] Ibid., p. 4.

[33] Ibid.

[34] Ibid.

[35] Ibid.

[36] Ibid., p. 7.

[37] Ibid., p. 8.

[38] Ibid., p. 7.

[39] Ibid.

[40] Ibid.

[41] Ibid.

[42] Ibid.

[43] Ibid., p. 8.

[44] Ibid.

[45] Ibid., p. 9.

[46] Ibid.

[47] Ibid.

[48] Ibid., p. 10.

[49] Ibid.

[50] Ibid.

[51] Ibid.

[52] Ibid., p. 11.

[53] Ibid., p. 12.

[54] Ibid.

[55] Ibid.

[56] Ibid.

[57] Ibid.

[58] Ibid., p. 13.

[59] Ibid.

[60] Ibid., p. 14.

[61] Ibid.

[62] Ibid.

[63] Ibid., p. 15.

[64] Ibid.

[65] Ibid.

[66] Cortese, F., (2013). Concepts for Functional Replication of Biological Neurons. The Rational Argumentator. Access: https://www.rationalargumentator.com/index/blog/2013/05/gradual-neuron-replacement/

[67] Cortese, F., (2013). Gradual Neuron Replacement for the Preservation of Subjective-Continuity. The Rational Argumentator. Access: https://www.rationalargumentator.com/index/blog/2013/05/gradual-neuron-replacement/

[68] Cortese, F., (2013). Wireless Synapses, Artificial Plasticity, and Neuromodulation. The Rational Argumentator. Access: https://www.rationalargumentator.com/index/blog/2013/05/wireless-synapses/

[69] Freitas Jr., R., (1998). “Exploratory Design in Medical Nanotechnology: A Mechanical Artificial Red Cell”. Artificial Cells, Blood Substitutes, and Immobil. Biotech. (26): 411–430. Access: http://www.ncbi.nlm.nih.gov/pubmed/9663339